Episode 56

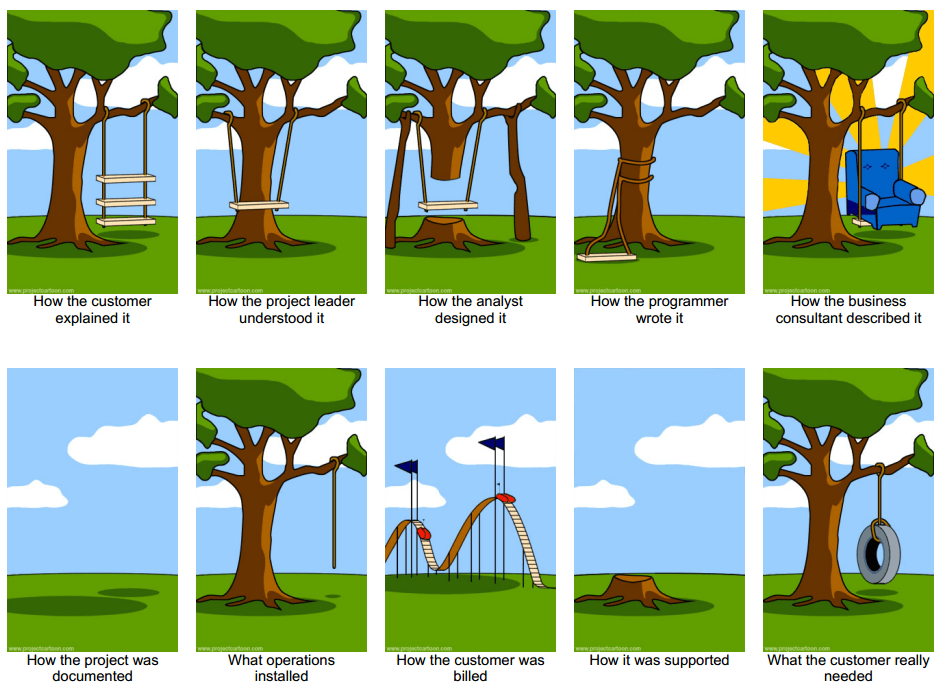

A day does not go by without a news article covering the latest high-profile security breach, with thousands to millions of users or customers impacted. With data being the commodity of the 21st century, there is a war over its control and access. Unfortunately, companies often take security seriously only after an incident has occurred, at which point a newly proactive administrator may ask for “all the security”. However, a maximally secure system can come at a significant cost to usability, fostering a culture where users find workarounds to complete their tasks.

A few months ago, I needed to go into the office for the first time in just over a year. Walking into the time capsule that was my cubicle, I was met with a security policy that required me to reset my password. We use two-factor authentication, so off I went, resetting the credentials on the four devices I use for work. Shortly into the reset extravaganza, I received a notification that I had exceeded the allowed number of two-factor token requests and should try again later. With only some services authenticated, scattered across devices, I was stuck with limited access for about an hour while I waited for my token pool to refresh. While not the end of the world, it is a simple example of how a policy can leave me highly incentivized to find any way possible to avoid another password reset in the future.

We have all had that moment, when setting up a new account, where the password requirements drive us nuts. Use uppercase and lowercase letters, numbers, and special characters, and make it at least 8 characters long. Use a unique password for every account you own, and change it regularly without repeating old ones. Passwords are just one of many layers of security, but we are all familiar with password schemes that make gaining or regaining access an absolute pain. We are all guilty of recycling passwords, incrementing a digit on each reset, and using other workarounds to make our lives easier; however, these workarounds create exploitable vulnerabilities. The weakest link in your organization is your users. Phishing scams are getting extremely good at spoofing regular communications. All it takes is a momentary lapse in vigilance, a user rushing through work to meet a deadline, or an unaddressed application vulnerability, and your organization is compromised.
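To illustrate why composition rules alone do not stop predictable workarounds, here is a minimal Python sketch, assuming exactly the rules listed above (upper- and lowercase letters, a digit, a special character, at least 8 characters). The function names `meets_policy` and `differs_only_in_digits` are hypothetical and not taken from any particular product.

```python
import re

# Hypothetical rule set mirroring the requirements described above.
MIN_LENGTH = 8


def meets_policy(password: str) -> bool:
    """Return True if the password satisfies every composition rule."""
    checks = [
        len(password) >= MIN_LENGTH,
        re.search(r"[A-Z]", password) is not None,         # uppercase letter
        re.search(r"[a-z]", password) is not None,         # lowercase letter
        re.search(r"[0-9]", password) is not None,         # digit
        re.search(r"[^A-Za-z0-9]", password) is not None,  # special character
    ]
    return all(checks)


def _without_digits(s: str) -> str:
    """Remove every digit from the string."""
    return re.sub(r"[0-9]", "", s)


def differs_only_in_digits(old: str, new: str) -> bool:
    """Hypothetical check: is the new password just the old one with
    different digits (e.g. an incremented year or counter)?"""
    return old != new and _without_digits(old) == _without_digits(new)


# Both passwords pass the policy, yet the reset is trivially predictable.
old, new = "Summer2023!", "Summer2024!"
print(meets_policy(old), meets_policy(new))  # True True
print(differs_only_in_digits(old, new))      # True
```

Every rule is satisfied, but an attacker who knows the old password can guess the new one, which is exactly the kind of exploitable workaround that strict rules tend to encourage.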

No system is 100% secure. At best, we can build a system that takes more effort to breach than the potential reward is worth. In simple terms, security can be thought of as layers of defense and partitions. A password is a layer, and whatever that credential has access to is the partition. The more layers of defense, the harder it is for an attack to succeed; this is why two-factor authentication is so important, and by some estimates it stops 99% of account-compromise attacks. The better partitioned users are, the less damage a compromised account can do. Limit access to only the data and administrative control employees need, and nothing beyond.
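The layers-and-partitions idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a real access-control system: the roles, permission strings, and the `Session` and `can_access` names are all hypothetical. Every authentication layer must pass, and each role is a partition that grants only what it lists, so anything unlisted is denied by default.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission map: each role is a "partition" that
# grants only what that role needs (least privilege, deny by default).
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "analyst": {"reports:read"},
    "engineer": {"reports:read", "repo:write"},
    "admin": {"reports:read", "repo:write", "users:manage"},
}


@dataclass
class Session:
    user: str
    role: str
    password_ok: bool = False       # layer 1: something you know
    second_factor_ok: bool = False  # layer 2: something you have


def can_access(session: Session, permission: str) -> bool:
    # Layers: both authentication checks must pass before anything else.
    if not (session.password_ok and session.second_factor_ok):
        return False
    # Partition: an unknown role maps to an empty set, so access is denied.
    return permission in ROLE_PERMISSIONS.get(session.role, set())


alice = Session("alice", "analyst", password_ok=True, second_factor_ok=True)
print(can_access(alice, "reports:read"))   # True
print(can_access(alice, "users:manage"))   # False: outside her partition

# A phished admin password without the second factor gets nothing.
stolen = Session("bob", "admin", password_ok=True)
print(can_access(stolen, "users:manage"))  # False: second layer not passed
```

A stolen password alone fails the second layer, and even a fully authenticated analyst cannot reach administrative controls, which limits the damage any single compromised account can do.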

Security by design is critical: security needs to be part of the development process rather than a feature added after the fact. Bolting on layers of security after the fact is like adding locks to a cheap door. More add-on locks mean the door’s users need more keys and more time to get through it, and despite the extra locks, the door can still be kicked in.

Usability depends as much on culture as on system design. Consider a culture where users view security features like multi-factor authentication as a chore, treat shared folders as the best way to manage their work, see user roles as a hassle or a roadblock to productivity, and avoid software updates for fear of breaking something. Designing a system for users in this category would be challenging, to say the least. Part of this problem can be solved with training and awareness; however, it is the task of your organization’s leadership to make security a priority, both by prioritizing security implementations and by clearly communicating their value. Second, security is not set-and-forget: policies must be reviewed regularly and changed as required. Security vulnerabilities are much like technical debt, but instead of the cost compounding steadily over time, the cost of security debt is asymmetric with risk: a small increase in risk can lead to a massive cost if a breach occurs.

Security and usability may seem at odds, but both are core considerations when developing software or managing a technical environment. Because people are the greatest vulnerability, giving equal weight to culture, training, and the technical environment will yield far better results than technical improvements alone.